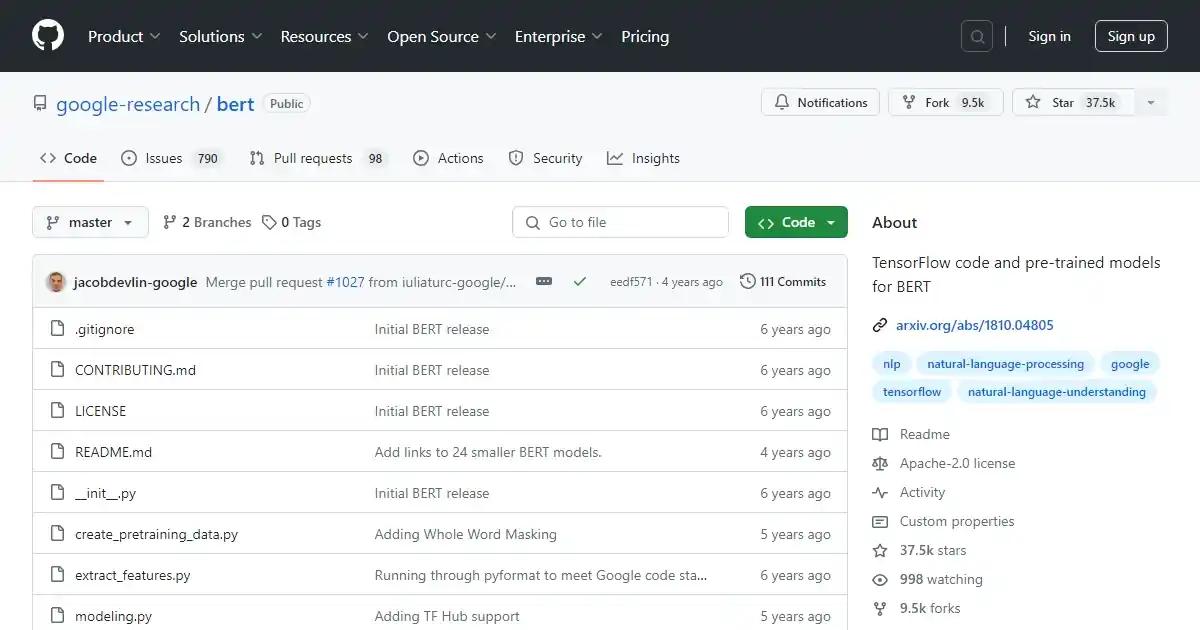

BERT

BERT (Bidirectional Encoder Representations from Transformers) is a language representation model.

January 3rd, 2026

About BERT

BERT (Bidirectional Encoder Representations from Transformers) is a language representation model introduced by Google Research. Unlike earlier language representation models that read text in only one direction, BERT is designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers. As a result, the representation of each word reflects the full sentence around it rather than only the words that precede it. The pre-trained BERT model can be fine-tuned with just one additional output layer to build strong models for a wide range of natural language processing (NLP) tasks, such as text classification, named entity recognition, question answering, and natural language inference.
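
To make "conditioning on both left and right context" concrete, the following is a minimal sketch in plain Python, not BERT's actual implementation. It contrasts which tokens a position can see under a bidirectional visibility mask and under a left-to-right one. The function names and the example sentence are illustrative assumptions.

```python
def bidirectional_mask(n):
    """Every position may attend to every position (left and right context)."""
    return [[1] * n for _ in range(n)]


def left_to_right_mask(n):
    """Position i may attend only to positions 0..i (left context only)."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def visible_tokens(tokens, mask, i):
    """Return the tokens that position i is allowed to see under the mask."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]


# Hypothetical example sentence; "bank" sits at index 1.
tokens = ["the", "bank", "of", "the", "river"]
n = len(tokens)

both_sides = visible_tokens(tokens, bidirectional_mask(n), 1)
left_only = visible_tokens(tokens, left_to_right_mask(n), 1)

print(both_sides)
print(left_only)
```

Under the bidirectional mask, the position holding "bank" can see "river" to its right, which is the kind of cue needed to tell a riverbank from a financial institution. Under the left-to-right mask, it sees only "the" and "bank".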

Key Features

- Pre-trains deep bidirectional representations from unlabeled text.

- Considers both left and right context in all layers.

- Captures nuanced relationships between words.

- Produces high-quality contextualized word embeddings.

- Allows fine-tuning for specific NLP tasks.
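
The "one additional output layer" used for fine-tuning can be pictured as a single linear layer followed by a softmax, applied to a sentence-level vector produced by the encoder. The sketch below uses plain Python and assumes a tiny, made-up 4-dimensional vector and hand-picked weights. In a real setup the vector would come from the pre-trained model and the weights would be learned during fine-tuning.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classification_head(pooled, weights, bias):
    """One linear layer plus softmax: the task-specific output layer."""
    logits = [
        sum(w * x for w, x in zip(row, pooled)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


# Hypothetical values for illustration only.
pooled = [0.5, -1.0, 2.0, 0.0]        # stand-in for the encoder's sentence vector
weights = [
    [0.1, 0.0, 0.3, 0.0],             # class 0
    [-0.2, 0.4, 0.0, 0.1],            # class 1
]
bias = [0.0, 0.1]

probs = classification_head(pooled, weights, bias)
print(probs)
```

Only `weights` and `bias` are new parameters here. Everything that produces `pooled` is reused from pre-training, which is why fine-tuning needs comparatively little task-specific data.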

Use Cases

- Text classification.

- Named entity recognition.

- Question answering.

- Natural language inference.
