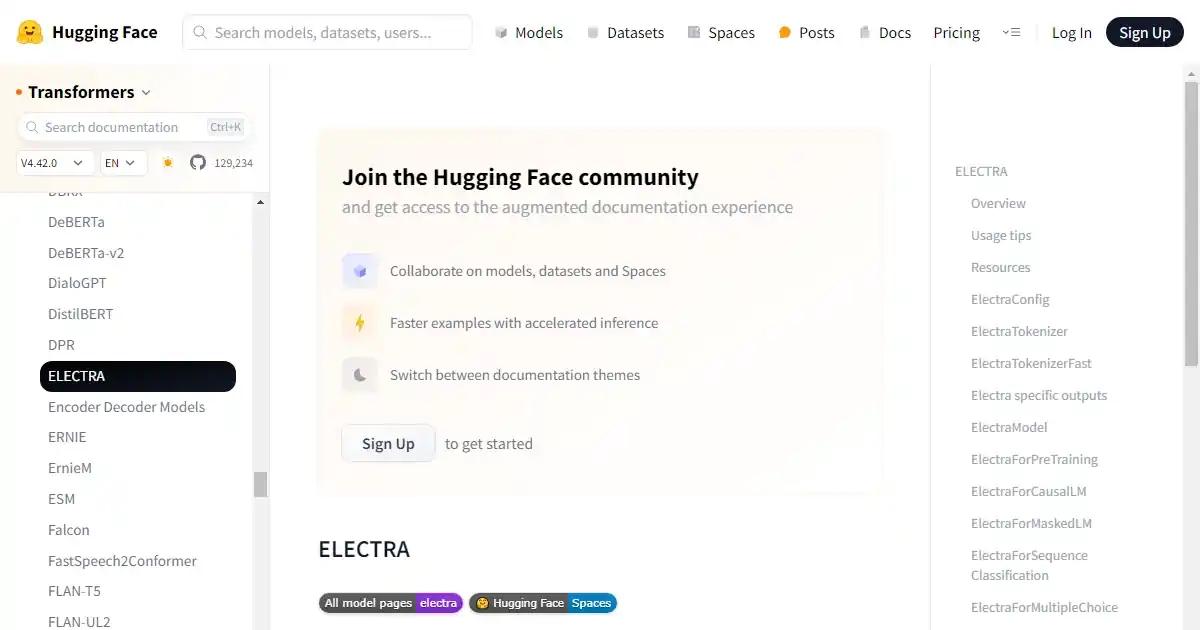

Electra

ELECTRA is a pre-training approach for text encoders that trains a discriminator to detect replaced tokens.

Pricing

Free

Tool Info

Rating: N/A (0 reviews)

Date Added: April 29, 2024

Categories

AI Chatbots

What is Electra?

The ELECTRA model was proposed in the paper ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators. It is a pre-training approach that trains two transformer models: the generator and the discriminator. The generator's role is to replace tokens in a sequence, so it is trained as a masked language model. The discriminator, which is the model used for downstream tasks, tries to identify which tokens in the sequence were replaced by the generator.
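The generator/discriminator setup can be illustrated with a small, self-contained sketch of how one training example for the discriminator might be built. This is not the paper's implementation: the function name `make_rtd_example`, the toy vocabulary, and the uniform random sampler standing in for the generator are all assumptions made for illustration. In ELECTRA itself the generator is a small masked language model that proposes plausible replacements.

```python
import random


def make_rtd_example(tokens, vocab, mask_prob=0.15, seed=0):
    """Build one toy replaced-token-detection example (hypothetical helper).

    Returns the chosen positions, the corrupted sequence, and per-token
    labels for the discriminator (1 = replaced, 0 = original).
    """
    rng = random.Random(seed)

    # Choose which positions the generator gets to fill in.
    n_mask = max(1, round(len(tokens) * mask_prob))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))

    # Stand-in generator (assumption): sample uniformly from the vocabulary.
    # A real generator would be a masked language model.
    corrupted = list(tokens)
    for i in positions:
        corrupted[i] = rng.choice(vocab)

    # Discriminator targets: a token counts as "replaced" only if it differs
    # from the original, so a lucky correct guess is labeled as original.
    labels = [int(c != t) for c, t in zip(corrupted, tokens)]
    return positions, corrupted, labels


if __name__ == "__main__":
    tokens = "the chef cooked the meal".split()
    vocab = ["the", "chef", "cooked", "ate", "meal", "a"]
    positions, corrupted, labels = make_rtd_example(tokens, vocab, mask_prob=0.4)
    print(positions, corrupted, labels)
```

The discriminator receives a label for every token in the sequence, not only the replaced positions, which is how it learns from the full input.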

Key Features and Benefits

- Pre-training approach for text encoders.

- Trains two transformer models: a generator and a discriminator.

- The generator replaces tokens in a sequence and is trained as a masked language model.

- The discriminator learns to identify which tokens were replaced.

Use Cases

- Masked-token prediction (with the generator).

- Language understanding.

- Text classification.
