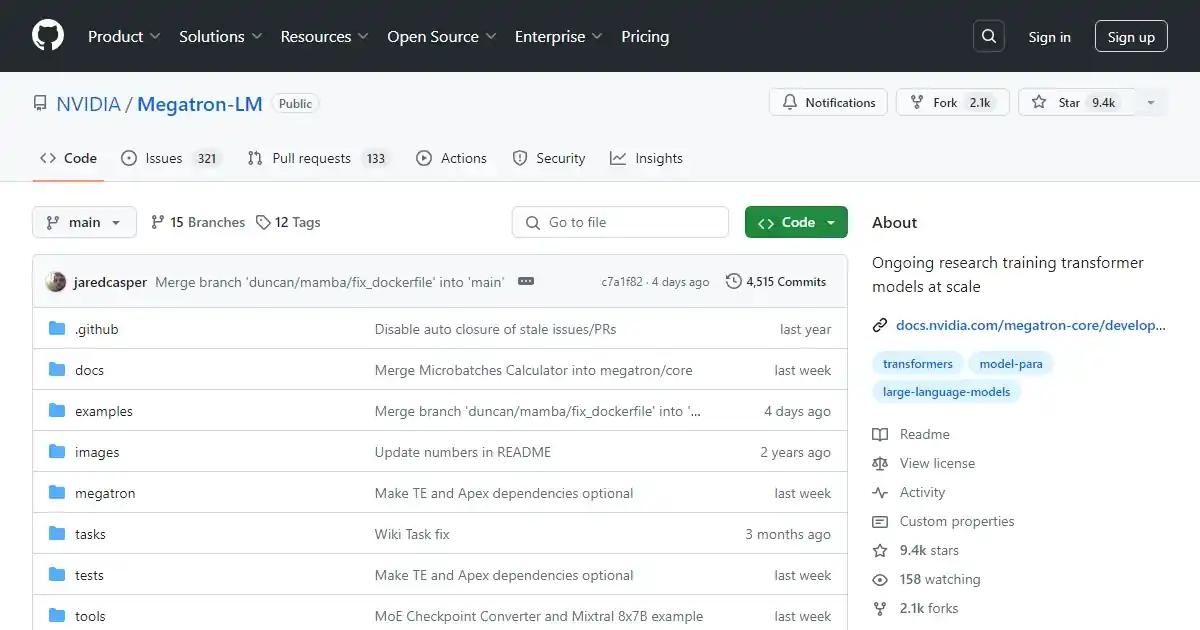

Megatron LM

A high-performance transformer model for large-scale language model training.

Tool Info

Date Added: October 26, 2023

What is Megatron LM?

Megatron is a high-performance transformer model released in three iterations (Megatron 1, 2, and 3). Its codebase supports training large transformer language models at scale, which makes it useful across many applications. It combines tensor, sequence, and pipeline model parallelism with mixed-precision training to make efficient use of hardware. The codebase can train language models with hundreds of billions of parameters and scales across a range of GPU configurations and model sizes.
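To make the idea of tensor model parallelism more concrete, here is a minimal sketch in plain Python. It is not Megatron's code: the function names (`matmul`, `split_columns`, `column_parallel_linear`) are hypothetical, the "devices" are simulated with lists, and only a column-wise split of a single linear layer is shown. Each shard computes its slice of the output independently, and concatenating the slices reproduces the result of the unsplit layer.

```python
def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n); both are lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def split_columns(weight, num_shards):
    """Split a weight matrix column-wise into equal shards, one per simulated device."""
    n_cols = len(weight[0])
    if n_cols % num_shards != 0:
        raise ValueError("column count must be divisible by num_shards")
    width = n_cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in weight]
            for i in range(num_shards)]


def column_parallel_linear(inputs, weight, num_shards):
    """Compute inputs @ weight by giving each simulated device one column shard."""
    shards = split_columns(weight, num_shards)
    # Each partial product depends only on its own shard, so on real hardware
    # these could run on separate GPUs at the same time.
    partials = [matmul(inputs, shard) for shard in shards]
    # Stand-in for a gather step: concatenate the output slices row by row.
    return [sum((p[r] for p in partials), []) for r in range(len(inputs))]


inputs = [[1, 2], [3, 4]]
weight = [[1, 0, 2, 1], [0, 1, 1, 3]]
full_output = matmul(inputs, weight)
parallel_output = column_parallel_linear(inputs, weight, num_shards=2)
```

With two shards, each simulated device holds half of the weight columns, and `parallel_output` equals `full_output`. Pipeline and sequence parallelism divide the work along other axes (layers and sequence positions, respectively) and are not shown here.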

Key Features and Benefits

- Efficient Model Parallelism: Megatron distributes work across GPUs using tensor, sequence, and pipeline model parallelism, which allows training to scale smoothly. This is particularly beneficial for large transformer models such as GPT, BERT, and T5.

- Mixed Precision: Megatron uses mixed-precision training to make better use of hardware resources and improve the performance of large-scale language model training.
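One reason mixed-precision training needs care is that small gradients can underflow to zero in 16-bit floats. The sketch below illustrates loss scaling, a common remedy in fp16 training generally. It is an illustration under stated assumptions rather than Megatron's implementation: the helper names (`to_fp16`, `fp16_gradient`) and the scale factor of 1024 are hypothetical, and half precision is simulated with the standard library's `struct` module.

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def fp16_gradient(true_grad, loss_scale=1.0):
    """Simulate holding a gradient in fp16, optionally with loss scaling."""
    # The gradient as it would be stored in half precision after scaling.
    stored = to_fp16(true_grad * loss_scale)
    # Unscale in higher precision before it would be applied to the weights.
    return stored / loss_scale


tiny_grad = 1e-8  # hypothetical gradient below the smallest fp16 subnormal
without_scaling = fp16_gradient(tiny_grad)
with_scaling = fp16_gradient(tiny_grad, loss_scale=1024.0)
```

Without scaling, the gradient is rounded to zero and the corresponding weight would never update. With scaling, a value close to the true gradient survives the round trip through half precision.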

Use Cases

- Large-scale language model training: Megatron's model parallelism and mixed precision make it well suited to training large transformer language models such as GPT, BERT, and T5. This can be useful for researchers, data scientists, and developers working on natural language processing projects.

- Biomedical domain language models: Megatron has been used in the development of BioMegatron, which advances language models in the biomedical domain. This can be useful for researchers and developers working in the healthcare industry.

- Open-domain question answering: Megatron has been used in the end-to-end training of neural retrievers for open-domain question answering. This can be useful for developers working on chatbots or virtual assistants.

- Conversational agents: Megatron has been used in the development of local knowledge-powered conversational agents and multi-actor generative dialog modeling. This can be useful for developers working on chatbots or virtual assistants.

- Reading comprehension: Megatron has been used to advance results on the RACE reading comprehension dataset leaderboard and to train question answering models from synthetic data. This can be useful for researchers and developers working on natural language processing projects.