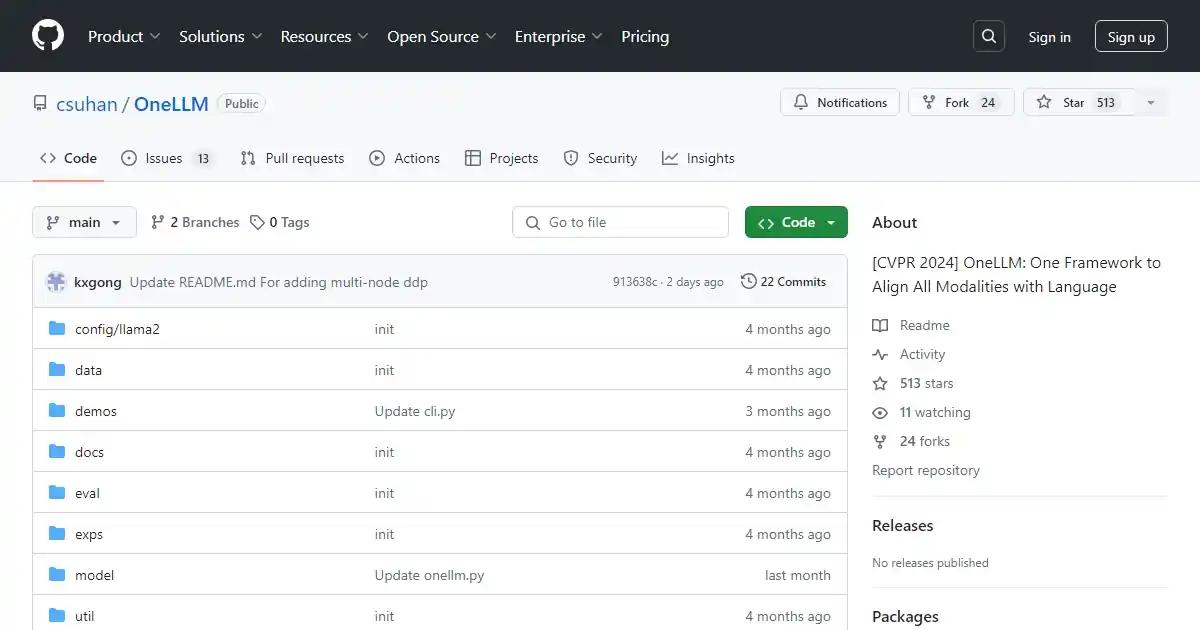

OneLLM

One Framework to Align All Modalities with Language

Tool Info

Date Added: April 15, 2024

What is OneLLM?

OneLLM is a framework presented in the paper 'OneLLM: One Framework to Align All Modalities with Language'. It aligns multiple modalities with language through a single, unified architecture made of modality tokenizers, a universal encoder, a universal projection module (UPM), and a large language model (LLM). With these components, OneLLM aligns inputs such as images, audio, video, point clouds, depth/normal maps, IMU readings, and fMRI brain activity with text. It is a multimodal large language model intended for tasks such as image captioning, visual question answering, and more.
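The data flow described above can be sketched in plain Python. This is a toy illustration, not OneLLM's implementation: the dimensions, the random weights, the chunk-and-project tokenizers, the single tanh layer standing in for the universal encoder, the plain linear map standing in for the UPM, and every function name (`make_tokenizer`, `universal_encoder`, `project`, `build_llm_input`) are hypothetical. It only shows the shape of the idea: each modality has its own tokenizer, while the encoder and projection are shared, and the projected modality tokens are joined with the text embeddings that the LLM receives (placing them first is an assumed ordering).

```python
import math
import random

# Toy sizes chosen for illustration only (not OneLLM's real dimensions).
ENC_DIM = 4  # width of the shared "universal encoder" space
LLM_DIM = 6  # width of the hypothetical LLM's token embeddings

rng = random.Random(0)  # fixed seed so the random weights are reproducible


def rand_matrix(rows, cols):
    """Random rows x cols weight matrix as nested lists."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    """Multiply a rows x cols matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def make_tokenizer(chunk):
    """Hypothetical modality tokenizer: splits a 1-D signal into fixed-size
    chunks and linearly maps each chunk to an ENC_DIM token.
    Trailing samples that do not fill a whole chunk are dropped."""
    weights = rand_matrix(ENC_DIM, chunk)

    def tokenize(signal):
        starts = range(0, len(signal) - chunk + 1, chunk)
        return [matvec(weights, signal[i:i + chunk]) for i in starts]

    return tokenize


# One tokenizer per modality ...
tokenizers = {"image": make_tokenizer(8), "audio": make_tokenizer(3)}

# ... but a single encoder and a single projection shared by every modality.
ENC_W = rand_matrix(ENC_DIM, ENC_DIM)
PROJ_W = rand_matrix(LLM_DIM, ENC_DIM)


def universal_encoder(tokens):
    # Stand-in for the shared encoder: one linear layer followed by tanh.
    return [[math.tanh(x) for x in matvec(ENC_W, t)] for t in tokens]


def project(features):
    # Stand-in for the UPM: a plain linear map into the LLM embedding width.
    return [matvec(PROJ_W, f) for f in features]


def build_llm_input(modality, signal, text_embeddings):
    tokens = tokenizers[modality](signal)   # modality-specific step
    features = universal_encoder(tokens)    # shared step
    modality_tokens = project(features)     # shared step
    # Assumed ordering: modality tokens first, then the text prompt.
    return modality_tokens + text_embeddings


if __name__ == "__main__":
    text = [[0.0] * LLM_DIM for _ in range(2)]  # two dummy text embeddings
    seq = build_llm_input("image", [0.1] * 32, text)
    print(len(seq))  # 6: four image tokens + two text tokens
```

Adding a new modality in this sketch means adding one entry to `tokenizers`; the shared encoder and projection stay unchanged, which mirrors the "one framework for all modalities" framing.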

Key Features and Benefits

- Modality tokenizers for transforming input signals into tokens.

- Universal encoder for representing input modalities in a common embedding space.

- Universal projection module (UPM) for mapping the encoded features into the LLM's input embedding space.

- Large language model (LLM) for generating text from the aligned modality representations.
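As we understand the OneLLM paper, the UPM is built from several projection experts whose outputs are combined by a routing module. The sketch below assumes a soft router (a linear layer plus softmax over the experts, computed per input feature vector) and experts that are single linear maps. The class name `ToyUPM`, the sizes, and the random weights are hypothetical; the code only illustrates the weighted-mixture idea, not the paper's exact design.

```python
import math
import random

IN_DIM, OUT_DIM, NUM_EXPERTS = 4, 6, 3  # toy sizes, not the paper's

rng = random.Random(1)  # fixed seed for reproducible toy weights


def rand_matrix(rows, cols):
    """Random rows x cols weight matrix as nested lists."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    """Multiply a rows x cols matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(xs):
    top = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class ToyUPM:
    """Hypothetical universal projection module: NUM_EXPERTS linear
    projection experts mixed by a soft (softmax) router."""

    def __init__(self):
        self.experts = [rand_matrix(OUT_DIM, IN_DIM) for _ in range(NUM_EXPERTS)]
        self.router = rand_matrix(NUM_EXPERTS, IN_DIM)

    def route(self, feature):
        # One positive weight per expert; the weights sum to 1.
        return softmax(matvec(self.router, feature))

    def __call__(self, feature):
        weights = self.route(feature)
        outputs = [matvec(expert, feature) for expert in self.experts]
        # Weighted sum of the expert outputs, coordinate by coordinate.
        return [sum(w * out[i] for w, out in zip(weights, outputs))
                for i in range(OUT_DIM)]


if __name__ == "__main__":
    upm = ToyUPM()
    feature = [0.1, -0.2, 0.3, 0.05]
    weights = upm.route(feature)
    projected = upm(feature)
    print(len(projected), round(sum(weights), 6))  # 6 1.0
```

With a soft router every expert contributes to every feature, and only the mixing weights change with the input; a hard top-1 router, which picks a single expert per feature, would be a different design choice.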

Use Cases

- Image captioning: Generating captions for images.

- Visual question answering: Answering questions based on visual content.

- Multi-modal sentiment analysis: Analyzing sentiment from combined textual and visual information.

- Cross-modal retrieval: Retrieving relevant information across different modalities.