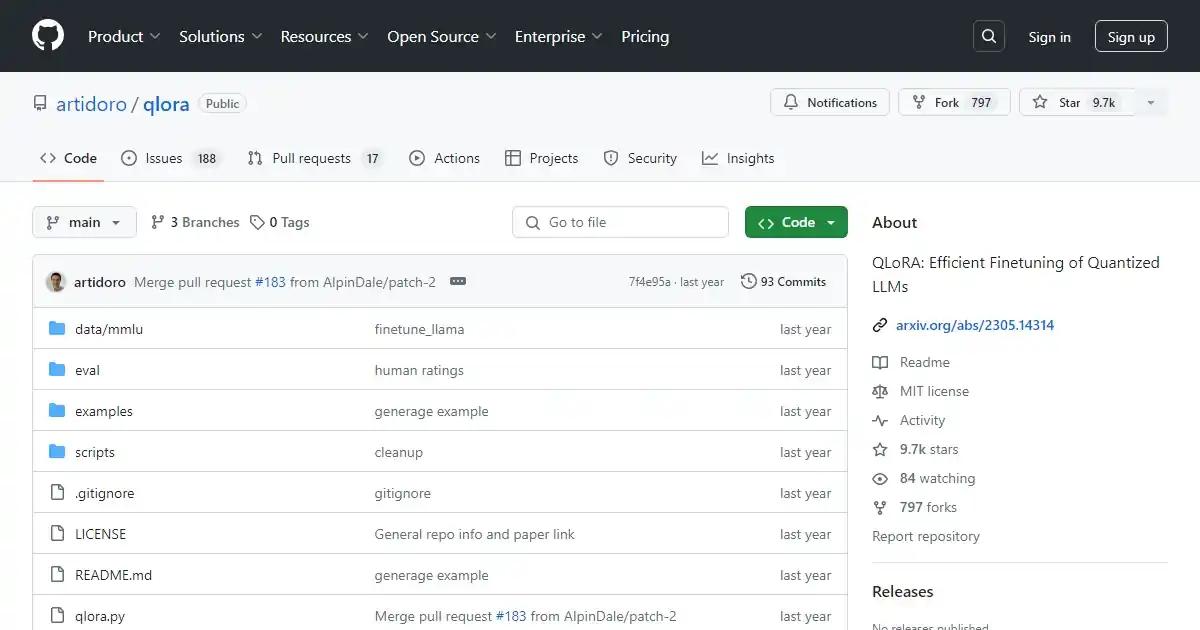

QLoRA

Efficient Finetuning of Quantized LLMs

What is QLoRA?

QLoRA is an efficient finetuning approach that reduces memory usage enough to finetune a 65B parameter model on a single 48GB GPU while preserving full 16-bit finetuning task performance. It backpropagates gradients through a frozen, 4-bit quantized pretrained language model into Low Rank Adapters (LoRA).
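To make the memory claim concrete, here is a rough back-of-the-envelope estimate of the memory taken by the weights alone at 16-bit and at 4-bit precision. It is a sketch under simplifying assumptions: the helper name `weight_memory_gb` is hypothetical, 1 GB is taken as 1e9 bytes, and the estimate ignores quantization constants, the LoRA adapters, optimizer state, and activations.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


params_65b = 65e9  # 65B parameter model, as in the description above

fp16_gb = weight_memory_gb(params_65b, 16)  # 16-bit weights
int4_gb = weight_memory_gb(params_65b, 4)   # 4-bit quantized weights

print(f"16-bit weights: {fp16_gb:.1f} GB")  # 130.0 GB
print(f"4-bit weights:  {int4_gb:.1f} GB")  # 32.5 GB
```

Under these assumptions, the 16-bit weights alone exceed a 48GB GPU, while the 4-bit weights leave headroom for the adapters and the rest of the training state.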

Key Features and Benefits

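- Finetunes quantized language models by training only the LoRA adapters while the pretrained weights stay frozen.

- Reduces memory usage enough to finetune a 65B parameter model on a single 48GB GPU.

- Preserves full 16-bit finetuning task performance.

- Uses a 4-bit quantized pretrained language model as the base.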
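The idea of sending gradients through a frozen, quantized weight matrix into small trainable adapters can be sketched in plain Python. This is a toy illustration, not the QLoRA implementation: the class name `QuantizedLoRALinear` and its helpers are hypothetical, and simple absmax rounding to integers in [-7, 7] stands in for the actual 4-bit data type.

```python
import random


def quantize_4bit(row):
    """Absmax-quantize a row of floats to integers in [-7, 7] plus one scale."""
    scale = max(abs(v) for v in row) / 7 or 1.0  # fall back to 1.0 for an all-zero row
    return [round(v / scale) for v in row], scale


def matvec(matrix, x):
    return [sum(m * v for m, v in zip(row, x)) for row in matrix]


class QuantizedLoRALinear:
    """Frozen quantized weight W plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.q_rows = [quantize_4bit(row) for row in weight]  # frozen, never updated
        self.A = [[rng.gauss(0.0, 0.1) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]  # zero init: adapter starts as a no-op

    def forward(self, x):
        # Dequantize on the fly, then add the low-rank correction B @ (A @ x).
        base = matvec([[q * s for q in qs] for qs, s in self.q_rows], x)
        h = matvec(self.A, x)
        return [b + d for b, d in zip(base, matvec(self.B, h))], h

    def sgd_step(self, x, target, lr):
        """One SGD step on 0.5 * ||y - target||^2, updating only A and B."""
        y, h = self.forward(x)
        err = [yi - ti for yi, ti in zip(y, target)]
        # Gradient flowing back into A's output: B^T @ err (uses B before its update).
        back = [sum(self.B[i][k] * err[i] for i in range(len(err))) for k in range(len(h))]
        for i, e in enumerate(err):
            for k, hk in enumerate(h):
                self.B[i][k] -= lr * e * hk  # dL/dB = err (outer) h
        for k, bk in enumerate(back):
            for j, xj in enumerate(x):
                self.A[k][j] -= lr * bk * xj  # dL/dA = (B^T err) (outer) x
        return 0.5 * sum(e * e for e in err)


weight = [[0.4, -1.2, 0.7], [2.1, 0.05, -0.9]]  # made-up pretrained weights
layer = QuantizedLoRALinear(weight, rank=2)
x, target = [1.0, 0.5, -0.5], [1.0, -1.0]       # made-up input and target
losses = [layer.sgd_step(x, target, lr=0.1) for _ in range(5)]
print(losses)
```

Only `A` and `B` change during training; the quantized rows in `q_rows` are read in the forward pass but never written, which is where the memory saving comes from in this sketch.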

Use Cases

- Finetuning large language models with limited GPU memory.

- Matching full 16-bit finetuning task performance at a lower memory cost.

- Memory-efficient adaptation of pretrained language models through Low Rank Adapters.
